Which of the Following Protocols Can TLS Use for Key Exchange

Transport Layer Security (TLS)

Networking 101, Chapter 4

Introduction

The SSL protocol was originally developed at Netscape to enable ecommerce transaction security on the Web. This required encryption to protect customers' personal data, as well as authentication and integrity guarantees to ensure a safe transaction. To achieve this, the SSL protocol was implemented at the application layer, directly on top of TCP (Figure 4-1). This lets protocols above it (HTTP, email, instant messaging, and many others) operate unchanged while gaining communication security across the network.

When SSL is used correctly, a third-party observer can only infer the connection endpoints, the type of encryption, the frequency, and an approximate amount of data sent. The observer cannot read or modify any of the actual data.

When the SSL protocol was standardized by the IETF, it was renamed to Transport Layer Security (TLS). Many use the TLS and SSL names interchangeably, but technically they are different, since each describes a different version of the protocol.

SSL 2.0 was the first publicly released version of the protocol, but it was quickly replaced by SSL 3.0 due to a number of discovered security flaws. Because the SSL protocol was proprietary to Netscape, the IETF formed an effort to standardize it. The result was RFC 2246, published in January 1999 and known as TLS 1.0. Since then, the IETF has continued iterating on the protocol to address security flaws and to extend its capabilities: TLS 1.1 (RFC 4346) was published in April 2006, and TLS 1.2 (RFC 5246) in August 2008. Work then began on defining TLS 1.3, which has since been published as RFC 8446 (August 2018).

That said, don't let the abundance of version numbers mislead you: your servers should always prefer and negotiate the latest stable version of the TLS protocol to ensure the best security, capability, and performance guarantees. In fact, some performance-critical features, such as HTTP/2, explicitly require TLS 1.2 or higher and will abort the connection otherwise. Good security and performance go hand in hand.
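As one concrete illustration, here is a minimal sketch using Python's standard `ssl` module (Python 3.7 or later). Using Python is only an assumption for the example; your server software will have its own configuration directive. The sketch refuses protocol versions older than TLS 1.2 and leaves newer versions enabled for negotiation:

```python
import ssl

def make_server_context() -> ssl.SSLContext:
    # Server-side context with the ssl module's recommended defaults.
    ctx = ssl.create_default_context(ssl.Purpose.CLIENT_AUTH)
    # Refuse anything older than TLS 1.2. Newer versions such as TLS 1.3
    # stay enabled, and the highest version both peers support is negotiated.
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    return ctx

ctx = make_server_context()
```

A real server would also load its certificate and key into this context before accepting connections.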

TLS was designed to operate on top of a reliable transport protocol such as TCP. However, it has also been adapted to run over datagram protocols such as UDP. The Datagram Transport Layer Security (DTLS) protocol, defined in RFC 6347, is based on TLS and provides similar security guarantees while preserving the datagram delivery model.

§Encryption, Authentication, and Integrity

The TLS protocol is designed to provide three essential services to all applications running above it: encryption, authentication, and data integrity. Technically, you are not required to use all three in every situation. You may decide to accept a certificate without validating its authenticity, but you should be well aware of the security risks and implications of doing so. In practice, a secure web application will leverage all three services.

- Encryption: A mechanism to obfuscate what is sent from one host to another.
- Authentication: A mechanism to verify the validity of provided identification material.
- Integrity: A mechanism to detect message tampering and forgery.

To establish a cryptographically secure data channel, the connection peers must agree on which ciphersuites will be used and on the keys used to encrypt the data. The TLS protocol specifies a well-defined handshake sequence to perform this exchange, which we will examine in detail in TLS Handshake. The ingenious part of this handshake, and the reason TLS works in practice, is its use of public key cryptography (also known as asymmetric key cryptography). Public key cryptography allows the peers to negotiate a shared secret key without any prior knowledge of each other, and to do so over an unencrypted channel.

As part of the TLS handshake, the protocol also allows both peers to authenticate their identity. In the browser, this authentication mechanism allows the client to verify that the server is who it claims to be (e.g., your bank). It rules out someone simply pretending to be the destination by spoofing its name or IP address. This verification is based on the established chain of trust; see Chain of Trust and Certificate Authorities. In addition, the server can optionally verify the identity of the client. For example, a company proxy server can authenticate all employees, each of whom could have their own unique certificate signed by the company.

Finally, with encryption and authentication in place, the TLS protocol also provides its own message framing mechanism and signs each message with a message authentication code (MAC). The MAC algorithm is a keyed, one-way cryptographic hash function (effectively a checksum), and both connection peers negotiate its keys. Whenever a TLS record is sent, a MAC value is generated and appended to that message. The receiver then computes and verifies the MAC value to ensure message integrity and authenticity.
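To make the per-record MAC concrete, the sketch below computes and checks an HMAC over a record the way TLS 1.2 does for non-AEAD ciphersuites (RFC 5246). The MAC input is the 64-bit sequence number, content type, protocol version, length, and payload. HMAC-SHA256 and the function names are illustrative assumptions, not a real TLS library API. Newer AEAD ciphersuites fold integrity into the cipher itself.

```python
import hashlib
import hmac
import struct

def record_mac(mac_key: bytes, seq_num: int, content_type: int,
               version: int, fragment: bytes) -> bytes:
    # MAC input: 8-byte sequence number, 1-byte content type,
    # 2-byte protocol version, 2-byte length, then the record payload.
    header = struct.pack("!QBHH", seq_num, content_type, version, len(fragment))
    return hmac.new(mac_key, header + fragment, hashlib.sha256).digest()

def verify_record_mac(mac_key: bytes, seq_num: int, content_type: int,
                      version: int, fragment: bytes, received: bytes) -> bool:
    expected = record_mac(mac_key, seq_num, content_type, version, fragment)
    # Constant-time comparison so timing does not reveal matching bytes.
    return hmac.compare_digest(expected, received)

# Usage: sender and receiver share mac_key derived during the handshake.
key = bytes(32)  # placeholder key for the example only
tag = record_mac(key, 0, 23, 0x0303, b"hello")   # 23 = application data
ok = verify_record_mac(key, 0, 23, 0x0303, b"hello", tag)
```

Because the sequence number is part of the MAC input, a replayed or reordered record fails verification even if its bytes are unchanged.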

Combined, all three mechanisms serve as a foundation for secure communication on the Web. All modern web browsers support a variety of ciphersuites, can authenticate both the client and server, and transparently perform message integrity checks for every record.

§HTTPS Everywhere

Unencrypted communication, via HTTP and other protocols, creates a large number of privacy, security, and integrity vulnerabilities. Such exchanges are susceptible to interception, manipulation, and impersonation, and can reveal users' credentials, history, identity, and other sensitive information. Our applications need to protect themselves, and our users, against these threats by delivering data over HTTPS.

- HTTPS protects the integrity of the website: Encryption prevents intruders from tampering with exchanged data, e.g., rewriting content or injecting unwanted and malicious content.
- HTTPS protects the privacy and security of the user: Encryption prevents intruders from listening in on the exchanged data. Each unprotected request can reveal sensitive information about the user. When such data is aggregated across many sessions, it can be used to de-anonymize users and reveal other sensitive information. All browsing activity, as far as the user is concerned, should be considered private and sensitive.
- HTTPS enables powerful features on the web: A growing number of new web platform features require explicit user opt-in, which in turn requires HTTPS. Examples include accessing users' geolocation, taking pictures, recording video, and enabling offline app experiences. The security and integrity guarantees of HTTPS are critical for delivering a secure user permission workflow and protecting users' preferences.

To further the point, both the Internet Engineering Task Force (IETF) and the Internet Architecture Board (IAB) have issued guidance to developers and protocol designers that strongly encourages adoption of HTTPS:

- IETF: Pervasive Monitoring Is an Attack
- IAB: Statement on Internet Confidentiality

As our dependency on the Internet has grown, so have the risks and the stakes for everyone who relies on it. As a result, it is our responsibility, both as application developers and as users, to protect ourselves by enabling HTTPS everywhere.

The HTTPS-Only Standard published by the White House's Office of Management and Budget is a great resource for additional information on the need for HTTPS, and for hands-on advice on deploying it.

§TLS Handshake

Before the client and the server can begin exchanging application data over TLS, they must negotiate the encrypted tunnel. They must agree on the version of the TLS protocol, choose the ciphersuite, and verify certificates if necessary. Unfortunately, each of these steps requires new packet roundtrips (Figure 4-2) between the client and the server, which adds startup latency to all TLS connections.

Figure 4-2 assumes the same (optimistic) 28 millisecond one-way "light in fiber" delay between New York and London used in previous TCP connection establishment examples; see Table 1-1.

- 0 ms: TLS runs over a reliable transport (TCP), which means that we must first complete the TCP 3-way handshake, which takes one full roundtrip.
- 56 ms: With the TCP connection in place, the client sends a number of specifications in plain text. These include the version of the TLS protocol it is running, the list of supported ciphersuites, and other TLS options it may want to use.
- 84 ms: The server picks the TLS protocol version for further communication, decides on a ciphersuite from the list provided by the client, attaches its certificate, and sends the response back to the client. Optionally, the server can also send a request for the client's certificate and parameters for other TLS extensions.
- 112 ms: This step assumes both sides can negotiate a common version and cipher, and the client is happy with the server's certificate. The client then initiates either the RSA or the Diffie-Hellman key exchange, which establishes the symmetric key for the ensuing session.
- 140 ms: The server processes the key exchange parameters sent by the client, checks message integrity by verifying the MAC, and returns an encrypted Finished message to the client.
- 168 ms: The client decrypts the message with the negotiated symmetric key and verifies the MAC. If all is well, the tunnel is established and application data can now be sent.
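The timestamps above follow directly from the 28 ms one-way delay. The TCP handshake costs one full roundtrip (56 ms), and every TLS flight after it adds one more one-way trip. A small Python sketch reproduces the timeline; the step labels paraphrase the list above:

```python
from __future__ import annotations

def tls12_full_handshake_timeline(one_way_ms: float) -> list[tuple[float, str]]:
    """Milestones (in ms) of a new TCP connection plus a full TLS handshake."""
    rtt = 2 * one_way_ms
    events = [(0, "client starts TCP 3-way handshake")]
    t = rtt  # the TCP handshake costs one full roundtrip
    for label in (
        "client sends ClientHello",
        "server replies with ServerHello and certificate",
        "client sends key exchange",
        "server returns Finished",
        "client verifies Finished; application data can flow",
    ):
        events.append((t, label))
        t += one_way_ms  # every later step waits for one one-way trip
    return events

for ms, label in tls12_full_handshake_timeline(28):
    print(f"{ms:>5} ms  {label}")
```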

As the above exchange illustrates, new TLS connections require two roundtrips for a "full handshake"; that's the bad news. However, optimized deployments can do much better in practice and deliver a consistent 1-RTT TLS handshake:

- False Start is a TLS protocol extension that allows the client and server to start transmitting encrypted application data when the handshake is only partially complete. Each side may send once its own ChangeCipherSpec and Finished messages are sent, without waiting for the other side to do the same. This optimization reduces handshake overhead for new TLS connections to one roundtrip; see Enable TLS False Start.
- If the client has previously communicated with the server, an "abbreviated handshake" can be used. It requires one roundtrip and also reduces CPU overhead for both sides by reusing the previously negotiated parameters for the secure session; see TLS Session Resumption.

Combining both optimizations allows us to deliver a consistent 1-RTT TLS handshake for new and returning visitors. It also saves computation for sessions that can be resumed from previously negotiated parameters. Make sure to take advantage of these optimizations in your deployments.
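The savings are easy to quantify. This sketch assumes the same 56 ms roundtrip as Figure 4-2. It compares when the client can first send application data with a full two-roundtrip handshake versus a 1-RTT handshake (False Start or resumption). The function name is illustrative:

```python
def time_to_first_app_data_ms(rtt_ms: float, tls_roundtrips: int) -> float:
    # One roundtrip for the TCP handshake, then the TLS handshake roundtrips.
    return rtt_ms * (1 + tls_roundtrips)

RTT_MS = 56  # New York <-> London example from Figure 4-2
full = time_to_first_app_data_ms(RTT_MS, 2)      # new visitor, full handshake
one_rtt = time_to_first_app_data_ms(RTT_MS, 1)   # False Start or resumption
print(full, one_rtt, full - one_rtt)  # prints: 168 112 56
```

The 56 ms saved applies to every new connection, which is why both optimizations are worth enabling.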

One of the design goals for TLS 1.3 is to reduce the latency overhead for setting up the secure connection: 1-RTT for new sessions, and 0-RTT for resumed sessions!

§RSA, Diffie-Hellman and Forward Secrecy

For a variety of historical and commercial reasons, the RSA handshake has been the dominant key exchange mechanism in most TLS deployments. The client generates a symmetric key, encrypts it with the server's public key, and sends it to the server to use as the symmetric key for the established session. In turn, the server uses its private key to decrypt the sent symmetric key, and the key exchange is complete. From this point forward, the client and server use the negotiated symmetric key to encrypt their session.

The RSA handshake works, but has a critical weakness: the same public-private key pair is used both to authenticate the server and to encrypt the symmetric session key sent to the server. As a result, an attacker who gains access to the server's private key and listens in on the exchange can decrypt the entire session. Worse, an attacker without the private key today can still record the encrypted session and decrypt it later, once they obtain the private key.
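The following toy sketch shows why a recorded RSA key exchange is only as safe as the server's long-term private key. As an illustrative assumption, it uses textbook RSA with two small Mersenne primes (2^61 - 1 and 2^31 - 1) and no padding. Real TLS RSA key exchange encrypts a 48-byte pre-master secret with PKCS#1 v1.5 padding under a much larger key.

```python
import secrets

# Toy RSA key pair built from the Mersenne primes 2**61 - 1 and 2**31 - 1.
# Illustration only: far too small and unpadded for real use.
P, Q = 2**61 - 1, 2**31 - 1
N, E = P * Q, 65537
D = pow(E, -1, (P - 1) * (Q - 1))   # server's long-term private exponent

def client_encrypt_session_key(session_key: int) -> int:
    # The client encrypts its chosen secret with the server's public key (N, E).
    return pow(session_key, E, N)

def decrypt_with_private_key(ciphertext: int, private_exp: int) -> int:
    return pow(ciphertext, private_exp, N)

session_key = secrets.randbelow(N)
recorded = client_encrypt_session_key(session_key)  # a passive attacker saves this

# Months later, the server's private key leaks...
stolen_d = D
recovered = decrypt_with_private_key(recorded, stolen_d)
assert recovered == session_key   # the old session is now readable
```

Nothing about the recorded traffic changed; the leak of a single long-term key is enough to unlock it.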

By contrast, the Diffie-Hellman key exchange allows the client and server to negotiate a shared secret without explicitly communicating it in the handshake. The server's private key is used only to sign and verify the handshake. The established symmetric key never leaves the client or server and cannot be intercepted by a passive attacker, even one with access to the private key.

For the curious, the Wikipedia article on Diffie-Hellman key exchange is a great place to learn about the algorithm and its properties.

Best of all, Diffie-Hellman key exchange can be used to reduce the risk of compromise of past communication sessions. We can generate a new "ephemeral" symmetric key as part of each and every key exchange and discard the previous keys. Because the ephemeral keys are never communicated and are renegotiated for each new session, the worst case is limited. An attacker who compromises the client or server can access the session keys of the current and future sessions. However, knowing the private key, or the current ephemeral key, does not help the attacker decrypt any previous sessions!

Combined, Diffie-Hellman key exchange and ephemeral session keys enable "perfect forward secrecy" (PFS). The compromise of long-term keys (e.g., the server's private key) does not compromise past session keys and does not allow the attacker to decrypt previously recorded sessions. A highly desirable property, to say the least!
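Here is a toy sketch of ephemeral Diffie-Hellman in Python. Each session draws fresh private exponents, and both peers derive the same secret even though only public values cross the wire. The exponents are dropped when the session ends. The group (p = 2^127 - 1, g = 3) is an illustrative assumption and far too weak for real use. TLS deployments use standardized finite-field groups or elliptic curves (ECDHE), and the server also signs its parameters, which this sketch omits.

```python
import secrets

# Toy group: P = 2**127 - 1 is a Mersenne prime, G = 3. Illustration only.
P = 2**127 - 1
G = 3

def new_ephemeral_keypair():
    private = secrets.randbelow(P - 3) + 2   # secret exponent in [2, P - 2]
    public = pow(G, private, P)              # the only value sent on the wire
    return private, public

def run_session():
    client_priv, client_pub = new_ephemeral_keypair()
    server_priv, server_pub = new_ephemeral_keypair()
    # Each side combines its own private exponent with the peer's public value.
    client_secret = pow(server_pub, client_priv, P)
    server_secret = pow(client_pub, server_priv, P)
    # The private exponents are local variables, discarded on return. In a
    # properly sized group, recorded public values alone cannot recreate the secret.
    return client_pub, server_pub, client_secret, server_secret

_, _, client_secret, server_secret = run_session()
assert client_secret == server_secret
```

Calling `run_session()` again produces unrelated keys, so a later compromise reveals nothing about earlier sessions.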

As a result, and this should not come as a surprise, the RSA handshake is now being actively phased out. All the popular browsers prefer ciphers that enable forward secrecy (i.e., rely on Diffie-Hellman key exchange). As an additional incentive, they may enable certain protocol optimizations only when forward secrecy is available, e.g., 1-RTT handshakes via TLS False Start.

Which is to say, consult your server documentation on how to enable and deploy forward secrecy! Once again, good security and performance go hand in hand.

§Application Layer Protocol Negotiation (ALPN)

Two network peers may want to use a custom application protocol to communicate with each other. One way to resolve this is to determine the protocol upfront, assign a well-known port to it (e.g., port 80 for HTTP, port 443 for TLS), and configure all clients and servers to use it. However, in practice, this is a slow and impractical process. Each port assignment must be approved and, worse, firewalls and other intermediaries often allow traffic only on ports 80 and 443.

As a result, to enable easy deployment of custom protocols, we must reuse port 80 or 443 and use an additional mechanism to negotiate the application protocol. Port 80 is reserved for HTTP, and the HTTP specification provides a special Upgrade flow for this very purpose. However, Upgrade can add an extra network roundtrip of latency, and in practice it is often unreliable in the presence of many intermediaries; see Proxies, Intermediaries, TLS, and New Protocols on the Web.

For a hands-on example of HTTP Upgrade workflow, flip ahead to Upgrading to HTTP/2.

The solution is, you guessed it, to use port 443, which is reserved for secure HTTPS sessions running over TLS. An end-to-end encrypted tunnel hides the data from intermediate proxies and enables a quick and reliable way to deploy new application protocols. However, we still need another mechanism to negotiate the protocol that will be used within the TLS session.

Application Layer Protocol Negotiation (ALPN), as the name implies, is a TLS extension that addresses this need. It extends the TLS handshake (Figure 4-2) and allows the peers to negotiate protocols without additional roundtrips. Specifically, the process is as follows:

- The client appends a new ProtocolNameList field, containing the list of supported application protocols, to the ClientHello message.
- The server inspects the ProtocolNameList field and returns a ProtocolName field indicating the selected protocol as part of the ServerHello message.

The server may respond with only a single protocol name. If it does not support any of the protocols the client requests, it may choose to abort the connection. As a result, once the TLS handshake is finished, the secure tunnel is established and the client and server agree on which application protocol will be used. They can immediately begin exchanging messages via the negotiated protocol.
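Below is a sketch of the server-side selection step. It assumes a server that honors its own preference order; RFC 7301 leaves the choice to the server. `select_alpn` is a hypothetical helper. In Python's standard `ssl` module, the real knobs are `SSLContext.set_alpn_protocols()` to advertise a list and `SSLSocket.selected_alpn_protocol()` to read the result after the handshake.

```python
from typing import Optional, Sequence

def select_alpn(client_protocols: Sequence[str],
                server_preference: Sequence[str]) -> Optional[str]:
    # ProtocolNameList from the ClientHello, as a set for quick lookup.
    offered = set(client_protocols)
    # Walk the server's own preference order; the first match wins.
    for proto in server_preference:
        if proto in offered:
            return proto   # returned to the client as ProtocolName
    return None            # no overlap: the server may abort the handshake

chosen = select_alpn(["h2", "http/1.1"], ["h2", "http/1.1"])
```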

§Server Name Indication (SNI)

An encrypted TLS tunnel can be established between any two TCP peers: the client only needs to know the IP address of the other peer to make the connection and perform the TLS handshake. However, what if the server wants to host multiple independent sites, each with its own TLS certificate, on the same IP address? How does that work? Trick question; it doesn't.

To address this problem, the Server Name Indication (SNI) extension was added to the TLS protocol. SNI allows the client to indicate, as part of the TLS handshake, the hostname it is attempting to connect to. In turn, the server can inspect the SNI hostname sent in the ClientHello message, select the appropriate certificate, and complete the TLS handshake for the desired host.
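On the server, SNI boils down to a lookup from the requested hostname to a certificate, with a fallback when the client sends no SNI at all. The helper and file names below are hypothetical. In Python's `ssl` module, the corresponding hook is `SSLContext.sni_callback`, which can switch the socket to a context loaded with the matching certificate.

```python
from typing import Dict, Optional

def select_certificate(server_name: Optional[str],
                       certificates: Dict[str, str],
                       default: str) -> str:
    # No SNI extension in the ClientHello: serve the default certificate.
    if not server_name:
        return default
    # Hostnames are case-insensitive; ignore a trailing dot.
    host = server_name.lower().rstrip(".")
    return certificates.get(host, default)

certs = {"blog.example.com": "blog-cert.pem", "shop.example.com": "shop-cert.pem"}
```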

§TLS Session Resumption

The extra latency and computational costs of the full TLS handshake impose a serious performance penalty on all applications that require secure communication. To help mitigate some of these costs, TLS provides a mechanism to resume a session, or share the same negotiated secret key data between multiple connections.

§Session Identifiers

The first resumption mechanism, Session Identifiers (RFC 5246), was introduced in SSL 2.0. It allowed the server to create and send a 32-byte session identifier as part of its ServerHello message during the full TLS negotiation we saw earlier. With the session ID in place, both the client and server can store the previously negotiated session parameters, keyed by session ID, and reuse them for a subsequent session.

Specifically, the client can include the session ID in the ClientHello message. This tells the server that the client still remembers the negotiated cipher suite and keys from the previous handshake and is able to reuse them. If the server finds the session parameters associated with that ID in its cache, an abbreviated handshake (Figure 4-3) can take place. Otherwise, a full new session negotiation is required, which will generate a new session ID.
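Here is a minimal sketch of the server-side session cache described above, keyed by a random 32-byte session ID. The class, the dictionary of parameters, and the least-recently-used eviction policy are illustrative assumptions, not any particular server's implementation:

```python
import os
from collections import OrderedDict
from typing import Optional

class SessionCache:
    """Server-side store of negotiated session parameters, keyed by session ID."""

    def __init__(self, max_entries: int = 10_000):
        self.max_entries = max_entries
        self._entries: "OrderedDict[bytes, dict]" = OrderedDict()

    def new_session(self, params: dict) -> bytes:
        # Full handshake: mint a random 32-byte ID to send in ServerHello.
        session_id = os.urandom(32)
        self._entries[session_id] = params
        if len(self._entries) > self.max_entries:
            self._entries.popitem(last=False)   # evict least recently used
        return session_id

    def resume(self, session_id: bytes) -> Optional[dict]:
        # The client offered this ID in ClientHello: look it up.
        params = self._entries.get(session_id)
        if params is not None:
            self._entries.move_to_end(session_id)   # mark as recently used
        return params   # None means a full handshake is required
```

Every entry costs server memory, which is exactly the deployment burden discussed next.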

Leveraging session identifiers removes a full roundtrip, as well as the overhead of the public key cryptography used to negotiate the shared secret key. A secure connection can therefore be established quickly and with no loss of security, since we are reusing previously negotiated session data.

Session resumption is an important optimization for both HTTP/1.x and HTTP/2 deployments. The abbreviated handshake eliminates a full roundtrip of latency and significantly reduces computational costs for both sides.

In fact, a browser that needs multiple connections to the same host (e.g., when HTTP/1.x is in use) will often wait for the first TLS negotiation to complete. Only then does it open additional connections to that server, so they can be "resumed" and reuse the same session parameters. If you've ever looked at a network trace and wondered why you rarely see multiple same-host TLS negotiations in flight, that's why!

However, one practical limitation of the Session Identifiers mechanism is that the server must create and maintain a session cache for every client. A server may see tens of thousands or even millions of unique connections every day, which leads to several problems:

- Memory is consumed for every open TLS connection.
- A session ID cache and eviction policies are required.
- Popular sites with many servers face nontrivial deployment challenges, since ideally those servers should share a TLS session cache for best performance.

None of the preceding issues are impossible to solve, and many high-traffic sites use session identifiers successfully today. But any multi-server deployment will need careful thinking and systems architecture to ensure a well-operating session cache.

§Session Tickets

To address this concern for server-side deployment of TLS session caches, the "Session Ticket" (RFC 5077) replacement mechanism was introduced. It removes the requirement for the server to keep per-client session state. Instead, if the client indicates that it supports session tickets, the server can include a New Session Ticket record. That record contains all of the negotiated session data, encrypted with a secret key known only to the server.

The client stores this session ticket and can include it in the SessionTicket extension of the ClientHello message in a subsequent session. Thus, all session data is stored only on the client, but the ticket is still safe because it is encrypted with a key known only to the server.

The session identifier and session ticket mechanisms are commonly referred to as session caching and stateless resumption, respectively. The main improvement of stateless resumption is the removal of the server-side session cache. This simplifies deployment: the client provides the session ticket on every new connection to the server until the ticket expires.

In practice, deploying session tickets across a set of load-balanced servers also requires careful thinking and systems architecture. All servers must be initialized with the same session key, and an additional mechanism is required to periodically and securely rotate the shared key across all servers.
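Below is a sketch of stateless resumption with a shared, rotating ticket key, as every server behind the load balancer would need. Note one simplification: RFC 5077 recommends encrypting the ticket's session state as well as authenticating it. Python's standard library has no block cipher, so this hypothetical `TicketKeyRing` only authenticates the ticket with HMAC-SHA256 and leaves the state readable. Keeping the previous key after a rotation (an assumed policy) lets tickets issued just before the rotation still resume. Ticket expiry is omitted.

```python
import hashlib
import hmac
import json
import os
from typing import Dict, Optional

KEY_NAME_LEN, TAG_LEN = 16, 32

class TicketKeyRing:
    """Ticket keys shared by every server behind the load balancer (hypothetical)."""

    def __init__(self) -> None:
        self.keys: Dict[bytes, bytes] = {}
        self.current = b""
        self.rotate()

    def rotate(self) -> None:
        new_name = os.urandom(KEY_NAME_LEN)
        self.keys[new_name] = os.urandom(32)
        # Keep the new key plus the previous one, so tickets issued just
        # before the rotation can still be redeemed; older keys are dropped.
        keep = (new_name, self.current)
        self.keys = {name: k for name, k in self.keys.items() if name in keep}
        self.current = new_name

    def issue(self, session_state: dict) -> bytes:
        # Ticket = key name || session state || HMAC tag. The server stores nothing.
        body = self.current + json.dumps(session_state).encode()
        tag = hmac.new(self.keys[self.current], body, hashlib.sha256).digest()
        return body + tag

    def redeem(self, ticket: bytes) -> Optional[dict]:
        if len(ticket) < KEY_NAME_LEN + TAG_LEN:
            return None
        body, tag = ticket[:-TAG_LEN], ticket[-TAG_LEN:]
        key = self.keys.get(body[:KEY_NAME_LEN])
        if key is None:
            return None   # unknown or retired key: fall back to a full handshake
        expected = hmac.new(key, body, hashlib.sha256).digest()
        if not hmac.compare_digest(expected, tag):
            return None   # tampered ticket
        return json.loads(body[KEY_NAME_LEN:])
```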

§Chain of Trust and Certificate Authorities

Authentication is an integral part of establishing every TLS connection. After all, it is possible to carry out a conversation over an encrypted tunnel with any peer, including an attacker. Unless we can be sure that the host we are speaking to is the one we trust, all the encryption work could be for nothing. To understand how we can verify the peer's identity, let's examine a simple authentication workflow between Alice and Bob:

- Both Alice and Bob generate their own public and private keys.
- Both Alice and Bob hide their respective private keys.
- Alice shares her public key with Bob, and Bob shares his with Alice.
- Alice generates a new message for Bob and signs it with her private key.
- Bob uses Alice's public key to verify the provided message signature.
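The five steps can be sketched with textbook RSA signatures over a SHA-256 digest. The tiny key, built from the Mersenne primes 2^61 - 1 and 2^31 - 1, and the unpadded signing are assumptions for illustration only. Real deployments use keys of 2048 bits or more with a padding scheme such as PSS.

```python
import hashlib

# Steps 1-2: Alice generates a toy key pair and keeps D to herself.
P, Q = 2**61 - 1, 2**31 - 1
N, E = P * Q, 65537                 # step 3: (N, E) is shared with Bob
D = pow(E, -1, (P - 1) * (Q - 1))   # Alice's private exponent

def digest(message: bytes) -> int:
    return int.from_bytes(hashlib.sha256(message).digest(), "big") % N

def sign(message: bytes, private_exp: int) -> int:
    return pow(digest(message), private_exp, N)

def verify(message: bytes, signature: int, public_exp: int) -> bool:
    return pow(signature, public_exp, N) == digest(message)

msg = b"Hi Bob, it's Alice"
sig = sign(msg, D)                  # step 4: Alice signs with her private key
assert verify(msg, sig, E)          # step 5: Bob checks with Alice's public key
```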

Trust is a key component of the preceding exchange. Specifically, public key encryption lets us use the sender's public key to verify that the message was signed with the right private key. The decision to approve the sender, however, is still based on trust. In the exchange just shown, Alice and Bob could have exchanged their public keys when they met in person. Because they know each other well, they are certain that their exchange was not compromised by an impostor. Perhaps they even verified their identities through some other, secret (physical) handshake they had established earlier!

Next, Alice receives a message from Charlie, whom she has never met, but who claims to be a friend of Bob's. To prove that he is friends with Bob, Charlie asked Bob to sign Charlie's public key with Bob's private key, and attached this signature to his message (Figure 4-4). In this case, Alice first checks Bob's signature of Charlie's key. She knows Bob's public key and can therefore verify that Bob did indeed sign Charlie's key. Because she trusts Bob's decision to verify Charlie, she accepts the message. She then performs a similar integrity check on Charlie's message to ensure that it is, indeed, from Charlie.

What we have just done is establish a chain of trust: Alice trusts Bob, Bob trusts Charlie, and by transitive trust, Alice decides to trust Charlie. As long as nobody in the chain is compromised, this allows us to build and grow the list of trusted parties.

Authentication on the Web and in your browser follows exactly the same process. So at this point you should be asking: whom does your browser trust, and whom do you trust when you use the browser? There are at least three answers to this question:

- Manually specified certificates: Every browser and operating system provides a mechanism for you to manually import any certificate you trust. How you obtain the certificate and verify its integrity is completely up to you.
- Certificate authorities: A certificate authority (CA) is a third party trusted by both the subject (owner) of the certificate and the party relying upon the certificate.
- The browser and the operating system: Every operating system and most browsers ship with a list of well-known certificate authorities. Thus, you also trust the vendors of this software to provide and maintain a list of trusted parties.

In practice, it would be impractical to store and manually verify each and every key for every website (although you can, if you are so inclined). Hence, the most common solution is to let certificate authorities (CAs) do this job for us (Figure 4-5). The browser specifies which CAs to trust (root CAs). The burden is then on the CAs to verify each site they sign, and to audit and verify that these certificates are not misused or compromised. If the security of any site with the CA's certificate is breached, that CA is also responsible for revoking the compromised certificate.

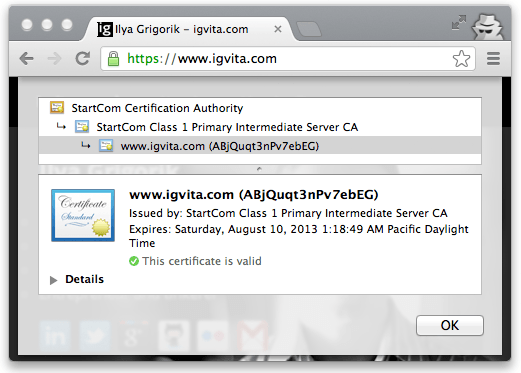

Every browser allows you to inspect the chain of trust of your secure connection (Figure 4-6), usually by clicking the lock icon beside the URL.

- The igvita.com certificate is signed by StartCom Class 1 Primary Intermediate Server.
- The StartCom Class 1 Primary Intermediate Server certificate is signed by the StartCom Certification Authority.
- The StartCom Certification Authority is a recognized root certificate authority.

The "trust anchor" for the entire chain is the root certificate authority, which in the case just shown is the StartCom Certification Authority. Every browser ships with a pre-initialized list of trusted certificate authorities ("roots"), and in this case the browser trusts and can verify the StartCom root certificate. The trust then extends to our destination site through a transitive chain: the browser, the browser vendor, and the StartCom certificate authority.
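The structural part of that walk can be sketched as follows, using the igvita.com chain above. The `Cert` type and `chains_to_trusted_root` helper are hypothetical and only compare issuer and subject names. Real validation also verifies each signature, validity dates, and revocation status.

```python
from dataclasses import dataclass
from typing import List, Set

@dataclass(frozen=True)
class Cert:
    subject: str
    issuer: str

def chains_to_trusted_root(chain: List[Cert], trusted_roots: Set[str]) -> bool:
    """chain[0] is the site's certificate; each later entry issued the one before."""
    if not chain:
        return False
    for child, parent in zip(chain, chain[1:]):
        # Each link must name the next certificate as its issuer.
        # Real validation would also check parent's signature over child here.
        if child.issuer != parent.subject:
            return False
    # The top of the chain must be issued by a root the browser already trusts.
    return chain[-1].issuer in trusted_roots

chain = [
    Cert("igvita.com", "StartCom Class 1 Primary Intermediate Server"),
    Cert("StartCom Class 1 Primary Intermediate Server", "StartCom Certification Authority"),
]
roots = {"StartCom Certification Authority"}
```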

§Certificate Revocation

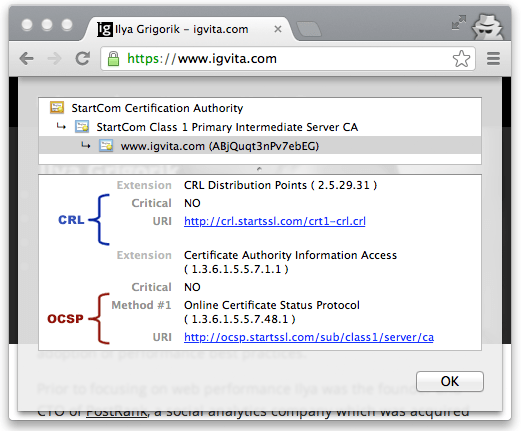

Occasionally the issuer of a certificate will need to revoke or invalidate it. Possible reasons include a compromised private key for the certificate or a compromised certificate authority. There are also more benign reasons, such as a superseding certificate or a change in affiliation. To address this, certificates contain instructions (Figure 4-7) on how to check whether they have been revoked. To ensure that the chain of trust is not compromised, each peer can check the status of each certificate as it verifies the chain. It does so by following the embedded instructions, along with the signatures.

§Certificate Revocation List (CRL)

Certificate Revocation List (CRL) is defined by RFC 5280 and specifies a simple mechanism to check the status of every certificate: each certificate authority maintains and periodically publishes a list of revoked certificate serial numbers. Anyone verifying a certificate can download the revocation list, cache it, and check whether a particular serial number appears in it. If the serial number is present, the certificate has been revoked.

This process is simple and straightforward, but it has a number of limitations:

- The growing number of revocations means that the CRL will only get longer, and each client must retrieve the entire list of serial numbers.
- There is no mechanism for instant notification of certificate revocation. If the client cached the CRL before the certificate was revoked, the revoked certificate will be deemed valid until the cache expires.
- The need to fetch the latest CRL from the CA may block certificate verification, which can add significant latency to the TLS handshake.
- The CRL fetch may fail for a variety of reasons, and in such cases the browser behavior is undefined. Most browsers treat such cases as a "soft fail", allowing the verification to proceed. Yes, yikes.
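Below is a sketch of the client-side check, including the soft-fail behavior and the stale-cache blind spot described above. The function and its string results are hypothetical. `cached_crl` stands for the set of serial numbers from the last successful download, or `None` if the fetch failed.

```python
from typing import FrozenSet, Optional

def check_revocation(serial: int,
                     cached_crl: Optional[FrozenSet[int]],
                     hard_fail: bool = False) -> str:
    if cached_crl is None:
        # The CRL fetch failed. Most browsers "soft fail" and carry on.
        return "reject" if hard_fail else "accept-unchecked"
    if serial in cached_crl:
        return "reject"
    # Not listed in this cached copy: a revocation published after the
    # copy was downloaded stays invisible until the cache is refreshed.
    return "accept"

crl = frozenset({1001, 1002})   # downloaded before serial 1003 was revoked
```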

§Online Certificate Status Protocol (OCSP)

To address some of the limitations of the CRL mechanism, RFC 2560 introduced the Online Certificate Status Protocol (OCSP), which performs a real-time check of the certificate's status. Unlike the CRL file, which contains all the revoked serial numbers, OCSP lets the client query the CA's certificate database directly. The query covers just the serial number in question and happens while the client validates the certificate chain.

As a result, the OCSP mechanism consumes less bandwidth and can provide real-time validation. However, the requirement to perform real-time OCSP queries creates its own set of issues:

- The CA must be able to handle the load of the real-time queries.
- The CA must ensure that the service is up and globally available at all times.
- Real-time OCSP requests may impair the client's privacy because the CA knows which sites the client is visiting.
- The client must block on OCSP requests while validating the certificate chain.
- The browser behavior is, once again, undefined and typically results in a "soft fail" if the OCSP fetch fails due to a network timeout or other errors.

As a real-world data point, Firefox telemetry shows that OCSP requests time out as much as 15% of the time, and add approximately 350 ms to the TLS handshake when successful—see hpbn.co/ocsp-performance.

§OCSP Stapling

For the reasons listed above, neither the CRL nor the OCSP revocation mechanism offers the security and performance guarantees that we want for our applications. However, don't despair: OCSP stapling (RFC 6066, "Certificate Status Request" extension) addresses most of the issues we saw earlier. It lets the server perform the validation and send the result ("stapled") to the client as part of the TLS handshake:

-

Instead of the client making the OCSP request, it is the server that periodically retrieves the signed and timestamped OCSP response from the CA.

-

The server then appends (i.e. "staples") the signed OCSP response as part of the TLS handshake, allowing the client to validate both the certificate and the attached OCSP revocation record signed by the CA.

This role reversal is secure, because the stapled record is signed by the CA and can be verified by the client, and it offers a number of important benefits:

-

The client does not leak its navigation history.

-

The client does not have to block and query the OCSP server.

-

The client may "hard-fail" revocation handling if the server opts in (by advertising the OCSP "Must-Staple" flag) and the verification fails.

In short, to get both the best security and performance guarantees, make sure to configure and test OCSP stapling on your servers.
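The client-side decision that stapling enables can be sketched as a small function. This is a minimal sketch, assuming a hypothetical, already-parsed `StapledResponse` type whose `signature_valid` flag stands in for verifying the CA's signature (not implemented here); it only shows how Must-Staple changes a missing or unusable staple from a soft fail into a hard fail.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import Optional


@dataclass
class StapledResponse:
    """Hypothetical, already-parsed stapled OCSP response."""
    signature_valid: bool   # stands in for checking the CA's signature
    cert_status: str        # "good", "revoked", or "unknown"
    next_update: datetime   # the response is stale after this time


def check_revocation(staple: Optional[StapledResponse], must_staple: bool,
                     now: datetime) -> str:
    """Return "accept", "reject", or "soft-fail" for the server certificate."""
    usable = (staple is not None
              and staple.signature_valid
              and now <= staple.next_update)
    if usable and staple.cert_status == "revoked":
        return "reject"
    if usable and staple.cert_status == "good":
        return "accept"
    # Missing, forged, stale, or "unknown": only Must-Staple makes this a hard fail.
    return "reject" if must_staple else "soft-fail"


now = datetime.now(timezone.utc)
fresh = StapledResponse(True, "good", now + timedelta(days=3))
print(check_revocation(fresh, must_staple=False, now=now))   # accept
print(check_revocation(None, must_staple=True, now=now))     # reject
```

Without the Must-Staple opt-in, the last branch falls back to the same "soft fail" behavior described for CRL and OCSP.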

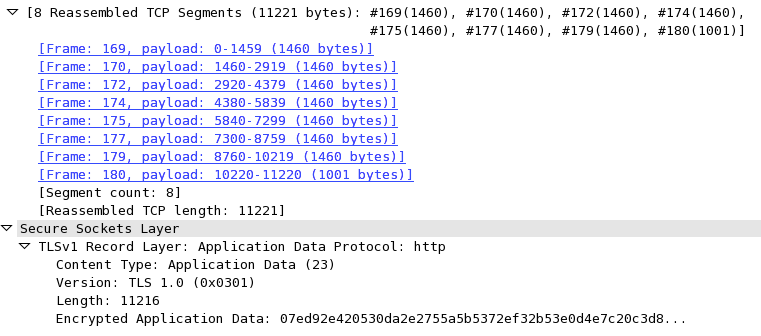

§TLS Record Protocol

Not unlike the IP or TCP layers below it, all data exchanged within a TLS session is also framed using a well-defined protocol (Figure 4-8). The TLS Record protocol is responsible for identifying different types of messages (handshake, alert, or data via the "Content Type" field), as well as securing and verifying the integrity of each message.

A typical workflow for delivering application data is as follows:

-

Record protocol receives application data.

-

Received data is divided into blocks: maximum of 2^14 bytes, or 16 KB per record.

-

Message authentication code (MAC) or HMAC is added to each record.

-

Data within each record is encrypted using the negotiated cipher.

Once these steps are complete, the encrypted data is passed down to the TCP layer for transport. On the receiving end, the same workflow, but in reverse, is applied by the peer: decrypt the record using the negotiated cipher, verify the MAC, then extract and deliver the data to the application above it.
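To make the sending side of this workflow concrete, here is a minimal sketch of the fragmentation and MAC steps for a TLS 1.2 cipher suite that uses HMAC-SHA256. The MAC input follows RFC 5246 (sequence number, content type, version, length, fragment); `mac_key` is a hypothetical key, and the encryption step is deliberately omitted. AEAD suites such as AES-GCM fold integrity into the cipher and do not append a separate HMAC.

```python
import hashlib
import hmac
import struct

MAX_FRAGMENT = 2 ** 14    # 16,384 bytes of plaintext per record
APPLICATION_DATA = 23     # TLS ContentType value for application data
TLS12 = (3, 3)            # ProtocolVersion bytes for TLS 1.2


def frame_records(data: bytes, mac_key: bytes, seq_start: int = 0) -> list:
    """Fragment data and append an HMAC-SHA256 to each record (no encryption).

    In real TLS the fragment + MAC would now be encrypted with the negotiated
    cipher, and the header's length field would describe the ciphertext.
    """
    records = []
    for i, offset in enumerate(range(0, len(data), MAX_FRAGMENT)):
        fragment = data[offset:offset + MAX_FRAGMENT]
        seq_num = seq_start + i   # implicit 64-bit record sequence number
        # MAC input: seq_num || type || version || length || fragment
        mac_input = struct.pack("!QBBBH", seq_num, APPLICATION_DATA, *TLS12,
                                len(fragment)) + fragment
        mac = hmac.new(mac_key, mac_input, hashlib.sha256).digest()  # 32 bytes
        body = fragment + mac
        # 5-byte record header: type (1), version (2), length (2)
        header = struct.pack("!BBBH", APPLICATION_DATA, *TLS12, len(body))
        records.append(header + body)
    return records


records = frame_records(b"x" * 20000, mac_key=b"k" * 32)  # hypothetical key
print([len(r) for r in records])   # [16421, 3653]
```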

The good news is that all the work just shown is handled by the TLS layer itself and is completely transparent to most applications. However, the record protocol does introduce a few important implications that we need to be aware of:

-

Maximum TLS record size is 16 KB

-

Each record contains a 5-byte header, a MAC (up to 20 bytes for SSLv3, TLS 1.0, TLS 1.1, and up to 32 bytes for TLS 1.2), and padding if a block cipher is used.

-

To decrypt and verify the record, the entire record must be available.

Picking the right record size for your application, if you have the ability to do so, can be an important optimization. Small records incur a larger CPU and byte overhead due to record framing and MAC verification, whereas large records will have to be delivered and reassembled by the TCP layer before they can be processed by the TLS layer and delivered to your application. Skip ahead to Optimize TLS Record Size for full details.

§Optimizing for TLS

Deploying your application over TLS will require some additional work, both within your application (e.g. migrating resources to HTTPS to avoid mixed content), and on the configuration of the infrastructure responsible for delivering the application data over TLS. A well-tuned deployment can make an enormous positive difference in the observed performance, user experience, and overall operational costs. Let's dive in.

§Reduce Computational Costs

Establishing and maintaining an encrypted channel introduces additional computational costs for both peers. Specifically, first there is the asymmetric (public key) encryption used during the TLS handshake (explained in TLS Handshake). Then, once a shared secret is established, it is used as a symmetric key to encrypt all TLS records.

As we noted earlier, public key cryptography is more computationally expensive than symmetric key cryptography, and in the early days of the Web it often required additional hardware to perform "SSL offloading." The good news is that this is no longer necessary: what once required dedicated hardware can now be done directly on the CPU. Large organizations such as Facebook, Twitter, and Google, which offer TLS to billions of users, perform all the necessary TLS negotiation and computation in software and on commodity hardware.

In January this year (2010), Gmail switched to using HTTPS for everything by default. Previously it had been introduced as an option, but now all of our users use HTTPS to secure their email between their browsers and Google, all the time. In order to do this we had to deploy no additional machines and no special hardware. On our production frontend machines, SSL/TLS accounts for less than 1% of the CPU load, less than 10 KB of memory per connection and less than 2% of network overhead. Many people believe that SSL/TLS takes a lot of CPU time and we hope the preceding numbers (public for the first time) will help to dispel that.

If you stop reading now you only need to remember one thing: SSL/TLS is not computationally expensive anymore.

Adam Langley (Google)

We have deployed TLS at a large scale using both hardware and software load balancers. We have found that modern software-based TLS implementations running on commodity CPUs are fast enough to handle heavy HTTPS traffic load without needing to resort to dedicated cryptographic hardware. We serve all of our HTTPS traffic using software running on commodity hardware.

Doug Beaver (Facebook)

Elliptic Curve Diffie-Hellman (ECDHE) is only a little more expensive than RSA for an equivalent security level… In practical deployment, we found that enabling and prioritizing ECDHE cipher suites actually caused negligible increase in CPU usage. HTTP keepalives and session resumption mean that most requests do not require a full handshake, so handshake operations do not dominate our CPU usage. We find 75% of Twitter's client requests are sent over connections established using ECDHE. The remaining 25% consists mostly of older clients that don't yet support the ECDHE cipher suites.

Jacob Hoffman-Andrews (Twitter)

To get the best results in your own deployments, make the most of TLS Session Resumption: deploy, measure, and optimize its success rate. Eliminating the need to perform the costly public key cryptography operations on every handshake will significantly reduce both the computational and latency costs of TLS; there is no reason to spend CPU cycles on work that you don't need to do.

Speaking of optimizing CPU cycles, make sure to keep your servers up to date with the latest version of the TLS libraries! In addition to the security improvements, you will also often see performance benefits. Security and performance go hand-in-hand.

§Enable 1-RTT TLS Handshakes

An unoptimized TLS deployment can easily add many additional roundtrips and introduce significant latency for the user: e.g. multi-RTT handshakes, slow and ineffective certificate revocation checks, large TLS records that require multiple roundtrips, and so on. Don't be that site; you can do much better.

A well-tuned TLS deployment should add at most one extra roundtrip for negotiating the TLS connection, regardless of whether it is new or resumed, and avoid all other latency pitfalls: configure session resumption, and enable forward secrecy to enable TLS False Start.

To get the best end-to-end performance, make sure to audit both your own and third-party services and servers used by your application, including your CDN provider. For a quick report-card overview of popular servers and CDNs, check out istlsfastyet.com.

§Optimize Connection Reuse

The best way to minimize both latency and computational overhead of setting up new TCP+TLS connections is to optimize connection reuse. Doing so amortizes the setup costs across requests and delivers a much faster experience to the user.

Verify that your server and proxy configurations are set up to allow keepalive connections, and audit your connection timeout settings. Many popular servers set aggressive connection timeouts (e.g. some Apache versions default to 5s timeouts) that force a lot of unnecessary renegotiations. For best results, use your logs and analytics to determine the optimal timeout values.
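One way to use your logs for this is to replay each client's request timestamps against candidate keepalive timeouts and count how many fresh TCP+TLS handshakes each setting would force. A minimal sketch, assuming a single connection per client and a hypothetical request log in seconds:

```python
def count_handshakes(request_times, keepalive_timeout):
    """Count the TCP+TLS handshakes one client needs for a request log.

    Assumes one connection that the server closes after keepalive_timeout
    seconds of inactivity; the next request then needs a new handshake.
    """
    handshakes = 0
    last = None
    for t in sorted(request_times):
        if last is None or t - last > keepalive_timeout:
            handshakes += 1
        last = t
    return handshakes


log = [0, 2, 9, 12, 30]   # hypothetical request times (seconds) for one client
for timeout in (5, 15, 60):
    # 3, 2, and 1 handshakes respectively
    print(f"timeout={timeout}s -> {count_handshakes(log, timeout)} handshakes")
```

Aggregating these counts across many clients gives a rough view of how much handshake work a longer timeout would save, to be weighed against the cost of holding idle connections open.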

§Leverage Early Termination

As we discussed in Primer on Latency and Bandwidth, we may not be able to make our packets travel faster, but we can make them travel a shorter distance. By placing our "edge" servers closer to the user (Figure 4-9), we can significantly reduce the roundtrip times and the total costs of the TCP and TLS handshakes.

A simple way to achieve this is to leverage the services of a content delivery network (CDN) that maintains pools of edge servers around the globe, or to deploy your own. By allowing the user to terminate their connection with a nearby server, instead of traversing across oceans and continental links to your origin, the client gets the benefit of "early termination" with shorter roundtrips. This technique is equally useful and important for static and dynamic content: static content can also be cached and served by the edge servers, whereas dynamic requests can be routed over established connections from the edge to origin.
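The payoff of early termination is easy to estimate, because every handshake roundtrip scales with the RTT. A back-of-the-envelope sketch, assuming one roundtrip for TCP, two for a full TLS 1.2 handshake, and hypothetical RTTs of 150 ms to a distant origin and 20 ms to a nearby edge server:

```python
def setup_latency_ms(rtt_ms: float, tcp_rtts: int = 1, tls_rtts: int = 2) -> float:
    """Time spent on the TCP and TLS handshakes before the first request."""
    return rtt_ms * (tcp_rtts + tls_rtts)


origin_ms = setup_latency_ms(150)   # hypothetical RTT to a distant origin
edge_ms = setup_latency_ms(20)      # hypothetical RTT to a nearby edge server
print(f"origin: {origin_ms} ms, edge: {edge_ms} ms, saved: {origin_ms - edge_ms} ms")
```

The edge-to-origin leg still exists for dynamic requests, but it runs over connections the edge has already established, so the user does not pay for those handshakes.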

§Configure Session Caching and Stateless Resumption

Terminating the connection closer to the user is an optimization that will help decrease latency for your users in all cases, but once again, no bit is faster than a bit not sent: send fewer bits. Enabling TLS session caching and stateless resumption allows us to eliminate an entire roundtrip of latency and reduce computational overhead for repeat visitors.

Session identifiers, on which TLS session caching relies, were introduced in SSL 2.0 and have wide support among most clients and servers. However, if you are configuring TLS on your server, do not assume that session support will be on by default. In fact, it is more common to have it off on most servers by default, but you know better! Double-check and verify your server, proxy, and CDN configuration:

-

Servers with multiple processes or workers should use a shared session cache.

-

The size of the shared session cache should be tuned to your levels of traffic.

-

A session timeout period should be provided.

-

In a multi-server setup, routing the same client IP, or the same TLS session ID, to the same server is one way to provide good session cache utilization.

-

Where "sticky" load balancing is not an option, a shared cache should be used between different servers to provide good session cache utilization, and a secure mechanism needs to be established to share and update the secret keys to decrypt the provided session tickets.

-

Check and monitor your TLS session cache statistics for best performance.
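Several items in this checklist can be illustrated with a small in-memory model: a size-bounded cache, a session timeout, hit/miss counters to monitor, and a "sticky" routing function that maps a session ID to the same server. This is a minimal sketch with hypothetical names (`SessionCache`, `pick_server`); real servers keep the cache in shared memory or an external store, and LRU eviction here is an assumption rather than any particular server's policy.

```python
import hashlib
import time
from collections import OrderedDict


class SessionCache:
    """Size-bounded TLS session cache with a timeout and hit/miss counters."""

    def __init__(self, max_entries, timeout_s, clock=time.monotonic):
        self.max_entries = max_entries    # tune to your traffic levels
        self.timeout_s = timeout_s        # session lifetime in seconds
        self.clock = clock
        self._entries = OrderedDict()     # session_id -> (created_at, state)
        self.hits = 0
        self.misses = 0

    def put(self, session_id, state):
        self._entries[session_id] = (self.clock(), state)
        self._entries.move_to_end(session_id)
        while len(self._entries) > self.max_entries:
            self._entries.popitem(last=False)    # evict least recently used

    def get(self, session_id):
        entry = self._entries.get(session_id)
        if entry is None or self.clock() - entry[0] > self.timeout_s:
            self._entries.pop(session_id, None)  # drop the expired entry, if any
            self.misses += 1
            return None                          # client needs a full handshake
        self._entries.move_to_end(session_id)
        self.hits += 1
        return entry[1]                          # abbreviated handshake possible

    def hit_rate(self):
        total = self.hits + self.misses
        return self.hits / total if total else 0.0


def pick_server(session_id, servers):
    """Sticky routing: the same session ID always maps to the same server."""
    digest = hashlib.sha256(session_id).digest()
    return servers[int.from_bytes(digest[:8], "big") % len(servers)]
```

The `hit_rate()` value is the kind of statistic the last checklist item suggests monitoring: a low rate points at a cache that is too small, a timeout that is too short, or routing that scatters returning clients across servers.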

In practice, and for best results, you should configure both session caching and session ticket mechanisms. These mechanisms work together to provide the best coverage for both new and older clients.

§Enable TLS False Start

Session resumption provides two important benefits: it eliminates an extra handshake roundtrip for returning visitors and reduces the computational cost of the handshake by allowing reuse of previously negotiated session parameters. However, it does not help in cases where the visitor is communicating with the server for the first time, or if the previous session has expired.

To get the best of both worlds (a one-roundtrip handshake for new and repeat visitors, and computational savings for repeat visitors) we can use TLS False Start, which is an optional protocol extension that allows the sender to send application data (Figure 4-10) when the handshake is only partially complete.

False Start does not modify the TLS handshake protocol; rather, it only affects the protocol timing of when the application data can be sent. Intuitively, once the client has sent the ClientKeyExchange record, it already knows the encryption key and can begin transmitting application data; the rest of the handshake is spent confirming that nobody has tampered with the handshake records, and can be done in parallel. As a result, False Start allows us to keep the TLS handshake at one roundtrip regardless of whether we are performing a full or abbreviated handshake.
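Counting roundtrips makes the benefit explicit. The sketch below assumes TLS 1.2 and counts only the roundtrips the TLS handshake adds before the client can send its first application data, on top of the one roundtrip for the TCP handshake:

```python
def tls12_handshake_rtts(resumed: bool, false_start: bool) -> int:
    """TLS 1.2 roundtrips before the client can send application data.

    Full handshake: 2 RTTs, or 1 RTT with False Start (application data is
    sent right after the client's Finished message, without waiting for the
    server's). Abbreviated (resumed) handshake: 1 RTT either way.
    """
    if resumed:
        return 1
    return 1 if false_start else 2


for resumed in (False, True):
    for false_start in (False, True):
        rtts = tls12_handshake_rtts(resumed, false_start)
        print(f"resumed={resumed}, false_start={false_start}: {rtts} RTT (+1 for TCP)")
```

Only the "full handshake without False Start" case costs a second roundtrip, which is exactly the case that session resumption cannot help with.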

§Optimize TLS Record Size

All application data delivered via TLS is transported within a record protocol (Figure 4-8). The maximum size of each record is 16 KB, and depending on the chosen cipher, each record will add anywhere from 20 to 40 bytes of overhead for the header, MAC, and optional padding. If the record then fits into a single TCP packet, then we also have to add the IP and TCP overhead: a 20-byte header for IPv4 (40 bytes for IPv6), and a 20-byte header for TCP with no options. As a result, there is potential for 60 to 100 bytes of overhead for each record. For a typical maximum transmission unit (MTU) size of 1,500 bytes on the wire, this packet structure translates to roughly 4 to 7% of framing overhead.
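The arithmetic behind these figures, as a short sketch: the 60-byte lower end pairs 20 bytes of TLS overhead with 20-byte IPv4 and TCP headers, and the 100-byte upper end assumes 40 bytes of TLS overhead with a 40-byte IPv6 header.

```python
MTU = 1500  # typical maximum transmission unit, in bytes


def framing_overhead(tls_bytes: int, ip_header: int, tcp_header: int = 20) -> float:
    """Fraction of a full-size packet consumed by TLS, TCP, and IP framing."""
    return (tls_bytes + ip_header + tcp_header) / MTU


low = framing_overhead(tls_bytes=20, ip_header=20)    # 60 bytes per packet
high = framing_overhead(tls_bytes=40, ip_header=40)   # 100 bytes per packet
print(f"{low:.1%} to {high:.1%}")                     # 4.0% to 6.7%
```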

The smaller the record, the higher the framing overhead. However, simply increasing the size of the record to its maximum size (16 KB) is not necessarily a good idea. If the record spans multiple TCP packets, then the TLS layer must wait for all the TCP packets to arrive before it can decrypt the data (Figure 4-11). If any of those TCP packets get lost, reordered, or throttled due to congestion control, then the individual fragments of the TLS record will have to be buffered before they can be decoded, resulting in additional latency. In practice, these delays can create significant bottlenecks for the browser, which prefers to consume data in a streaming manner.

Small records incur overhead, large records incur latency, and there is no one value for the "optimal" record size. Instead, for web applications, which are consumed by the browser, the best strategy is to dynamically adjust the record size based on the state of the TCP connection:

-

When the connection is new and the TCP congestion window is low, or when the connection has been idle for some time (see Slow-Start Restart), each TCP packet should carry exactly one TLS record, and the TLS record should occupy the full maximum segment size (MSS) allocated by TCP.

-

When the connection congestion window is large and if we are transferring a large stream (e.g., streaming video), the size of the TLS record can be increased to span multiple TCP packets (up to 16 KB) to reduce framing and CPU overhead on the client and server.

If the TCP connection has been idle, and even if Slow-Start Restart is disabled on the server, the best strategy is to decrease the record size when sending a new burst of data: the conditions may have changed since the last transmission, and our goal is to minimize the probability of buffering at the application layer due to lost packets, reordering, and retransmissions.

Using a dynamic strategy delivers the best performance for interactive traffic: a small record size eliminates unnecessary buffering latency and improves the time-to-first-{HTML byte, …, video frame}, and a larger record size optimizes throughput by minimizing the overhead of TLS for long-lived streams.

To determine the optimal record size for each state, let's start with the initial case of a new or idle TCP connection where we want to avoid TLS records spanning multiple TCP packets:

-

Allocate 20 bytes for IPv4 framing overhead and 40 bytes for IPv6.

-

Allocate 20 bytes for TCP framing overhead.

-

Allocate 40 bytes for TCP options overhead (timestamps, SACKs).

Assuming a common 1,500-byte starting MTU, this leaves 1,420 bytes for a TLS record delivered over IPv4, and 1,400 bytes for IPv6. To be future-proof, use the IPv6 size, which leaves us with 1,400 bytes for each TLS record, and adjust as needed if your MTU is lower.
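The same budget as a one-line calculation, with the header sizes from the list above as defaults. The last call uses the 1,280-byte IPv6 minimum MTU as an example of a lower MTU.

```python
def tls_record_budget(mtu: int = 1500, ip_header: int = 20,
                      tcp_header: int = 20, tcp_options: int = 40) -> int:
    """Largest TLS record (in bytes) that still fits in one TCP segment."""
    return mtu - ip_header - tcp_header - tcp_options


print(tls_record_budget(ip_header=20))            # 1420 (IPv4)
print(tls_record_budget(ip_header=40))            # 1400 (IPv6)
print(tls_record_budget(mtu=1280, ip_header=40))  # 1180 (IPv6 minimum MTU)
```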

Next, the decision as to when the record size should be increased, and when it should be reset if the connection has been idle, can be based on pre-configured thresholds: increase the record size up to 16 KB after X KB of data have been transferred, and reset the record size after Y milliseconds of idle time.
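A minimal sketch of that policy, assuming hypothetical thresholds (1 MB standing in for "X KB" and one second for "Y milliseconds") and a caller that asks for the next record size before each write and reports what it sent. The class name and its methods are illustrative, not a TLS library API.

```python
import time

SMALL_RECORD = 1400        # one record per TCP segment (the IPv6 budget from the text)
LARGE_RECORD = 16 * 1024   # maximum TLS record size


class RecordSizer:
    """Pick the next TLS record size from bytes sent and idle time.

    boost_after_bytes plays the role of "X KB" and idle_reset_s of
    "Y milliseconds"; both defaults are hypothetical.
    """

    def __init__(self, boost_after_bytes=1024 * 1024, idle_reset_s=1.0,
                 clock=time.monotonic):
        self.boost_after_bytes = boost_after_bytes
        self.idle_reset_s = idle_reset_s
        self.clock = clock
        self.sent = 0            # bytes sent since the last reset
        self.last_send = None    # time of the most recent write

    def next_record_size(self):
        now = self.clock()
        if self.last_send is not None and now - self.last_send > self.idle_reset_s:
            self.sent = 0        # idle connection: start small again
        if self.sent >= self.boost_after_bytes:
            return LARGE_RECORD
        return SMALL_RECORD

    def record_sent(self, nbytes):
        self.sent += nbytes
        self.last_send = self.clock()
```

Keeping the thresholds as parameters reflects the text's point that there is no single optimal value: they should be tuned per deployment.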

Typically, configuring the TLS record size is not something we can control at the application layer. Instead, it is often a setting, and sometimes a compile-time constant, for your TLS server. Check the documentation of your server for details on how to configure these values.

§Optimize the Certificate Chain

Verifying the chain of trust requires that the browser traverse the chain, starting from the site certificate, and recursively verify the certificate of the parent until it reaches a trusted root. Hence, it is critical that the provided chain includes all the intermediate certificates. If any are omitted, the browser will be forced to pause the verification process and fetch the missing certificates, adding additional DNS lookups, TCP handshakes, and HTTP requests into the process.

How does the browser know from where to fetch the missing certificates? Each child certificate typically contains a URL for the parent. If the URL is omitted and the required certificate is not included, then the verification will fail.
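The traversal described above can be modeled in a few lines. This sketch uses a hypothetical `Cert` record (subject, issuer, and an optional parent URL, which in real certificates typically comes from the Authority Information Access "CA Issuers" field) and a `fetchable` dictionary that stands in for the network; signature verification is out of scope. Each URL it returns is a certificate the server should have sent, and a fetch the browser had to make.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Set


@dataclass
class Cert:
    subject: str
    issuer: str
    parent_url: Optional[str] = None   # where the issuer's certificate can be fetched


def walk_chain(leaf: Cert, sent: List[Cert], trusted_roots: Set[str],
               fetchable: Dict[str, Cert], max_depth: int = 10) -> List[str]:
    """Walk from the leaf up to a trusted root; return the URLs that had to be fetched."""
    by_subject = {c.subject: c for c in sent}
    fetched = []
    cert = leaf
    for _ in range(max_depth):
        if cert.issuer in trusted_roots:
            return fetched              # reached a root the client already trusts
        parent = by_subject.get(cert.issuer)
        if parent is None:
            url = cert.parent_url
            if url is None or url not in fetchable:
                raise ValueError(f"missing issuer {cert.issuer!r}: verification fails")
            fetched.append(url)         # extra DNS + TCP + HTTP roundtrips
            parent = fetchable[url]
        cert = parent
    raise ValueError("chain too long or cyclic")
```

Note that the walk stops as soon as a certificate's issuer is a trusted root, which is also why sending the root itself only adds bytes.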

Conversely, do not include unnecessary certificates, such as the trusted roots, in your certificate chain: they add unnecessary bytes. Recall that the server certificate chain is sent as part of the TLS handshake, which is likely happening over a new TCP connection that is in the early stages of its slow-start algorithm. If the certificate chain size exceeds TCP's initial congestion window, then we will inadvertently add additional roundtrips to the TLS handshake: the certificate length will overflow the congestion window and cause the server to stop and wait for a client ACK before proceeding.

In practice, the size and depth of the certificate chain was a much bigger concern on older TCP stacks that set their initial congestion window to 4 TCP segments; see Slow-Start. For newer deployments, the initial congestion window has been raised to 10 TCP segments and should be more than sufficient for most certificate chains.

That said, verify that your servers are using the latest TCP stack and settings, and optimize and reduce the size of your certificate chain. Sending fewer bytes is always a good and worthwhile optimization.

§Configure OCSP Stapling

Every new TLS connection requires that the browser verify the signatures of the sent certificate chain. However, there is one more critical step that we can't forget: the browser also needs to verify that the certificates have not been revoked.

To verify the status of the certificate the browser can use one of several methods: Certificate Revocation List (CRL), Online Certificate Status Protocol (OCSP), or OCSP Stapling. Each method has its own limitations, but OCSP Stapling provides, by far, the best security and performance guarantees; refer to earlier sections for details. Make sure to configure your servers to include (staple) the OCSP response from the CA with the provided certificate chain. Doing so allows the browser to perform the revocation check without any extra network roundtrips and with improved security guarantees.

-

OCSP responses can vary from 400 to 4,000 bytes in size. Stapling this response to your certificate chain will increase its size, so pay close attention to the total size of the certificate chain, such that it doesn't overflow the initial congestion window for new TCP connections.

-

Current OCSP Stapling implementations only allow a single OCSP response to be included, which means that the browser may have to fall back to another revocation mechanism if it needs to validate other certificates in the chain; reduce the length of your certificate chain. In the future, OCSP Multi-Stapling should address this particular problem.
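Putting the two size concerns together, a rough check is whether the certificate chain plus the stapled OCSP response fits in the server's first flight. This is a simplification that ignores the other handshake messages; it assumes a 1,460-byte MSS (a 1,500-byte MTU minus 20-byte IPv4 and TCP headers), and the example input reuses the 3,571 bytes read during the handshake in the s_client example in Testing and Verification as a stand-in for the chain size.

```python
def fits_initial_cwnd(chain_bytes: int, ocsp_bytes: int = 0,
                      initcwnd_segments: int = 10, mss: int = 1460) -> bool:
    """Rough check: does the chain plus a stapled OCSP response fit in the first flight?

    Ignores ServerHello and other handshake messages, so treat a near-miss as a miss.
    """
    return chain_bytes + ocsp_bytes <= initcwnd_segments * mss


print(fits_initial_cwnd(3571, ocsp_bytes=4000))                       # True: 7,571 <= 14,600
print(fits_initial_cwnd(3571, ocsp_bytes=4000, initcwnd_segments=4))  # False: 7,571 > 5,840
print(fits_initial_cwnd(3571, ocsp_bytes=400, initcwnd_segments=4))   # True: 3,971 <= 5,840
```

The second case shows why chain size mattered so much on older stacks with a 4-segment initial window: a large OCSP response alone can push the handshake into an extra roundtrip.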

Most popular servers support OCSP stapling. Check the relevant documentation for support and configuration instructions. Similarly, if using or deciding on a CDN, check that their TLS stack supports and is configured to use OCSP stapling.

§Enable HTTP Strict Transport Security (HSTS)

HTTP Strict Transport Security is an important security policy mechanism that allows an origin to declare access rules to a compliant browser via a simple HTTP header, e.g., "Strict-Transport-Security: max-age=31536000". Specifically, it instructs the user-agent to enforce the following rules:

-

All requests to the origin should be sent over HTTPS. This includes both navigation and all other same-origin subresource requests: e.g. if the user types in a URL without the https prefix the user agent should automatically convert it to an https request; if a page contains a reference to a non-https resource, the user agent should automatically convert it to request the https version.

-

If a secure connection cannot be established, the user is not allowed to circumvent the warning and request the HTTP version; i.e. the origin is HTTPS-only.

-

max-age specifies the lifetime of the specified HSTS ruleset in seconds (e.g., max-age=31536000 is equal to a 365-day lifetime for the advertised policy).

-

includeSubdomains indicates that the policy should apply to all subdomains of the current origin.

HSTS converts the origin to an HTTPS-only destination and helps protect the application from a variety of passive and active network attacks. As an added bonus, it also offers a nice performance optimization by eliminating the need for HTTP-to-HTTPS redirects: the client automatically rewrites all requests to the secure origin before they are dispatched!
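A minimal sketch of what a compliant client does with the header: parse the `max-age` and `includeSubDomains` directives, then rewrite `http://` URLs for covered hosts before sending them. The function names are hypothetical, and the sketch leaves out details such as explicit port handling and preloaded policies.

```python
from urllib.parse import urlsplit


def parse_hsts(header_value: str) -> dict:
    """Parse a Strict-Transport-Security header value into a policy dict."""
    policy = {"max_age": None, "include_subdomains": False}
    for directive in header_value.split(";"):
        name, _, value = directive.strip().partition("=")
        name = name.strip().lower()
        if name == "max-age":
            policy["max_age"] = int(value.strip().strip('"'))
        elif name == "includesubdomains":
            policy["include_subdomains"] = True
    return policy


def upgrade_url(url: str, hsts_host: str, policy: dict) -> str:
    """Rewrite an http:// URL to https:// if its host is covered by the policy."""
    parts = urlsplit(url)
    host = (parts.hostname or "").lower()
    covered = host == hsts_host or (
        policy["include_subdomains"] and host.endswith("." + hsts_host))
    # max-age=0 (or no max-age) means there is no active policy.
    if parts.scheme == "http" and covered and policy["max_age"]:
        return parts._replace(scheme="https").geturl()
    return url


policy = parse_hsts("max-age=31536000; includeSubDomains")
print(policy["max_age"] // 86400)   # 365 (days)
print(upgrade_url("http://www.example.com/app.js", "example.com", policy))
```

Because the rewrite happens before the request is dispatched, no plaintext request (and no redirect roundtrip) ever reaches the network for covered hosts.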

Make sure to thoroughly test your TLS deployment before enabling HSTS. Once the policy is cached by the client, failure to negotiate a TLS connection will result in a hard-fail: the user will see the browser error page and won't be allowed to proceed. This behavior is an explicit and necessary design choice to prevent network attackers from tricking clients into accessing your site without HTTPS.

§Enable HTTP Public Key Pinning (HPKP)

One of the shortcomings of the current system, as discussed in Chain of Trust and Certificate Authorities, is our reliance on a large number of trusted Certificate Authorities (CAs). On the one hand, this is convenient, because it means that we can obtain a valid certificate from a wide pool of entities. However, it also means that any one of these entities is able to issue a valid certificate for our, and any other, origin without their explicit consent.

The compromise of the DigiNotar certificate authority is one of several high-profile examples where an attacker was able to issue and use fake, but valid, certificates against hundreds of high-profile sites.

Public Key Pinning enables a site to send an HTTP header that instructs the browser to remember ("pin") one or more certificates in its certificate chain. By doing so, it is able to scope which certificates, or issuers, should be accepted by the browser on subsequent visits:

-

The origin can pin its leaf certificate. This is the most secure strategy because you are, in effect, hard-coding a small set of specific certificate signatures that should be accepted by the browser.

-

The origin can pin one of the parent certificates in the certificate chain. For example, the origin can pin the intermediate certificate of its CA, which tells the browser that, for this particular origin, it should only trust certificates signed by that particular certificate authority.

Picking the right strategy for which certificates to pin, which and how many backups to provide, duration, and other criteria for deploying HPKP are important, nuanced, and beyond the scope of our discussion. Consult your favorite search engine, or your local security guru, for more information.

HPKP also exposes a "report only" mode that does not enforce the provided pin but is able to report detected failures. This can be a great first step towards validating your deployment, and serves as a mechanism to detect violations.
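For reference, each pin is a base64-encoded SHA-256 digest of a certificate's SubjectPublicKeyInfo (its public key), not of the whole certificate. A minimal sketch of building the header, assuming you already have the DER-encoded key bytes (the byte strings in the usage lines are placeholders) and including a backup pin, per the strategy discussion above. Major browsers have since removed HPKP support, so treat this as an illustration of the mechanism.

```python
import base64
import hashlib
from typing import List, Optional


def spki_pin(spki_der: bytes) -> str:
    """pin-sha256 value: base64 of the SHA-256 digest of a DER-encoded SubjectPublicKeyInfo."""
    return base64.b64encode(hashlib.sha256(spki_der).digest()).decode("ascii")


def hpkp_header(pins: List[str], max_age: int, report_uri: Optional[str] = None,
                report_only: bool = False) -> str:
    """Build a Public-Key-Pins (or Public-Key-Pins-Report-Only) header line."""
    name = "Public-Key-Pins-Report-Only" if report_only else "Public-Key-Pins"
    directives = [f'pin-sha256="{p}"' for p in pins]
    directives.append(f"max-age={max_age}")
    if report_uri:
        directives.append(f'report-uri="{report_uri}"')
    return f"{name}: " + "; ".join(directives)


primary = spki_pin(b"placeholder: DER bytes of the current key")
backup = spki_pin(b"placeholder: DER bytes of an offline backup key")
print(hpkp_header([primary, backup], max_age=5184000, report_only=True))
```

Starting in report-only mode, as suggested above, lets you see violations before any client is locked out by a bad pin.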

§Update Site Content to HTTPS

To get the best security and performance guarantees, it is critical that the site actually uses HTTPS to fetch all of its resources. Otherwise, we run into a number of issues that will compromise both, or worse, break the site:

-

Mixed "active" content (e.g. scripts and stylesheets delivered over HTTP) will be blocked by the browser and may break the functionality of the site.

-

Mixed "passive" content (e.g. images, video, audio, etc., delivered over HTTP) will be fetched, but will allow the attacker to observe and infer user activity, and degrade performance by requiring additional connections and handshakes.

Audit your content and update your resources and links, including third-party content, to use HTTPS. The Content Security Policy (CSP) mechanism can be of great help here, both to identify HTTPS violations and to enforce the desired policies.

```
Content-Security-Policy: upgrade-insecure-requests
Content-Security-Policy-Report-Only: default-src https:; report-uri https://example.com/reporting/endpoint
```

-

Tells the browser to upgrade all (own and third-party) requests to HTTPS.

-

Tells the browser to report any non-HTTPS violations to the designated endpoint.

CSP provides a highly configurable mechanism to control which assets are allowed to be used, and how and from where they can be fetched. Make use of these capabilities to protect your site and your users.
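For the content audit described earlier in this section, a rough first pass can be automated with Python's standard-library HTML parser: flag `http://` subresources and classify them as active or passive. The tag-to-category mapping below is a simplified assumption, not the browser's full list, and only stylesheet links are checked.

```python
from html.parser import HTMLParser

# (tag, attribute) pairs that load subresources, and how browsers treat them
# when fetched over plain HTTP. A simplified, assumed mapping.
SUBRESOURCES = {
    ("script", "src"): "active",
    ("link", "href"): "active",      # only rel="stylesheet" links are checked
    ("iframe", "src"): "active",
    ("img", "src"): "passive",
    ("audio", "src"): "passive",
    ("video", "src"): "passive",
    ("source", "src"): "passive",
}


class MixedContentFinder(HTMLParser):
    def __init__(self):
        super().__init__()
        self.findings = []   # (kind, tag, url) tuples

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "link" and "stylesheet" not in (attrs.get("rel") or "").lower():
            return           # ignore non-stylesheet links such as rel="canonical"
        for name, value in attrs.items():
            kind = SUBRESOURCES.get((tag, name))
            if kind and value and value.lower().startswith("http://"):
                self.findings.append((kind, tag, value))


def find_mixed_content(html: str) -> list:
    finder = MixedContentFinder()
    finder.feed(html)
    finder.close()
    return finder.findings
```

A static scan like this misses resources injected by scripts at runtime, which is where the CSP report-only endpoint shown above complements it.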

§Performance Checklist

As application developers we are shielded from most of the complexity of the TLS protocol: the client and server do most of the hard work on our behalf. However, as we saw in this chapter, this does not mean that we can ignore the performance aspects of delivering our applications over TLS. Tuning our servers to enable critical TLS optimizations and configuring our applications to enable the client to take advantage of such features pays high dividends: faster handshakes, reduced latency, better security guarantees, and more.

With that in mind, a short checklist to put on the agenda:

-

Get best performance from TCP; see Optimizing for TCP.

-

Upgrade TLS libraries to latest release, and (re)build servers against them.

-

Enable and configure session caching and stateless resumption.

-

Monitor your session caching hit rates and adjust configuration accordingly.

-

Configure forward secrecy ciphers to enable TLS False Start.

-

Terminate TLS sessions closer to the user to minimize roundtrip latencies.

-

Use dynamic TLS record sizing to optimize latency and throughput.

-

Audit and optimize the size of your certificate chain.

-

Configure OCSP stapling.

-

Configure HSTS and HPKP.

-

Configure CSP policies.

-

Enable HTTP/2; see HTTP/2.

§Testing and Verification

Finally, to verify and test your configuration, you can use an online service, such as the Qualys SSL Server Test, to scan your public server for common configuration and security flaws. Additionally, you should familiarize yourself with the openssl command-line interface, which will help you inspect the entire handshake and configuration of your server locally.

```
$> openssl s_client -state -CAfile root.ca.crt -connect igvita.com:443

CONNECTED(00000003)
SSL_connect:before/connect initialization
SSL_connect:SSLv2/v3 write client hello A
SSL_connect:SSLv3 read server hello A
depth=2 /C=IL/O=StartCom Ltd./OU=Secure Digital Certificate Signing
        /CN=StartCom Certification Authority
verify return:1
depth=1 /C=IL/O=StartCom Ltd./OU=Secure Digital Certificate Signing
        /CN=StartCom Class 1 Primary Intermediate Server CA
verify return:1
depth=0 /description=ABjQuqt3nPv7ebEG/C=US
        /CN=www.igvita.com/emailAddress=ilya@igvita.com
verify return:1
SSL_connect:SSLv3 read server certificate A
SSL_connect:SSLv3 read server done A
SSL_connect:SSLv3 write client key exchange A
SSL_connect:SSLv3 write change cipher spec A
SSL_connect:SSLv3 write finished A
SSL_connect:SSLv3 flush data
SSL_connect:SSLv3 read finished A
---
Certificate chain
 0 s:/description=ABjQuqt3nPv7ebEG/C=US
     /CN=www.igvita.com/emailAddress=ilya@igvita.com
   i:/C=IL/O=StartCom Ltd./OU=Secure Digital Certificate Signing
     /CN=StartCom Class 1 Primary Intermediate Server CA
 1 s:/C=IL/O=StartCom Ltd./OU=Secure Digital Certificate Signing
     /CN=StartCom Class 1 Primary Intermediate Server CA
   i:/C=IL/O=StartCom Ltd./OU=Secure Digital Certificate Signing
     /CN=StartCom Certification Authority
---
Server certificate
-----BEGIN CERTIFICATE-----
... snip ...
---
No client certificate CA names sent
---
SSL handshake has read 3571 bytes and written 444 bytes
---
New, TLSv1/SSLv3, Cipher is RC4-SHA
Server public key is 2048 bit
Secure Renegotiation IS supported
Compression: NONE
Expansion: NONE
SSL-Session:
    Protocol  : TLSv1
    Cipher    : RC4-SHA
    Session-ID: 269349C84A4702EFA7 ...
    Session-ID-ctx:
    Master-Key: 1F5F5F33D50BE6228A ...
    Key-Arg   : None
    Start Time: 1354037095
    Timeout   : 300 (sec)
    Verify return code: 0 (ok)
---
```

-

Client completed verification of received certificate chain.

-

Received certificate chain (2 certificates).

-

Size of received certificate chain.

-

Issued session identifier for stateful TLS resume.

In the preceding example, we connect to igvita.com on the default TLS port (443), and perform the TLS handshake. Because s_client makes no assumptions about known root certificates, we manually specify the path to the root certificate which, at the time of writing, is the StartSSL Certificate Authority for the example domain. Your browser already has common root certificates and is thus able to verify the chain, but s_client makes no such assumptions. Try omitting the root certificate, and you will see a verification error in the log.

Inspecting the certificate chain shows that the server sent two certificates, which, together with the rest of the server's handshake messages, added up to 3,571 bytes. Also, we can see the negotiated TLS session variables (chosen protocol, cipher, key), and we can also see that the server issued a session identifier for the current session, which may be resumed in the future.
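When checking many servers, it can help to pull the fields discussed above out of the s_client output programmatically. A minimal sketch with a hypothetical `summarize_s_client` helper; the regular expressions assume the output format shown in the example and may need adjusting for other OpenSSL versions.

```python
import re


def summarize_s_client(output: str) -> dict:
    """Pull a few handshake facts out of `openssl s_client` text output."""
    patterns = {
        "bytes_read": r"SSL handshake has read (\d+) bytes",
        "protocol": r"^[ \t]*Protocol[ \t]*:[ \t]*(\S+)",
        "cipher": r"^[ \t]*Cipher[ \t]*:[ \t]*(\S+)",
        "session_id": r"^[ \t]*Session-ID:[ \t]*(\S+)",   # does not match Session-ID-ctx
        "verify_code": r"Verify return code:[ \t]*(\d+)",
    }
    summary = {}
    for key, pattern in patterns.items():
        match = re.search(pattern, output, re.MULTILINE)
        summary[key] = match.group(1) if match else None
    # Each certificate in the "Certificate chain" section starts with "<n> s:".
    summary["chain_length"] = len(re.findall(r"^[ \t]*\d+ s:", output, re.MULTILINE))
    return summary
```

Using `[ \t]*` rather than `\s*` keeps each match on its own line, so an empty field such as `Session-ID-ctx:` is not accidentally read as the next line's value.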

Source: https://hpbn.co/transport-layer-security-tls/
